DeepSeek V3: The Open-Source AI Model Challenging ChatGPT

On December 26, 2024, DeepSeek, a Chinese AI startup, launched DeepSeek-V3. This powerful open-source AI language model is already challenging the dominance of OpenAI’s ChatGPT. With its advanced capabilities and open accessibility, DeepSeek-V3 is positioning itself as a serious competitor in the rapidly evolving AI landscape.

In this article, we’ll explore what makes DeepSeek-V3 unique, how it compares to ChatGPT, and what its rise means for AI development. As more developers and researchers turn to open-source solutions, DeepSeek-V3’s potential to reshape the AI field becomes clear.

Background on DeepSeek

DeepSeek is a Chinese AI startup founded in 2023. Its mission is to push the boundaries of artificial intelligence and contribute to the development of artificial general intelligence (AGI). The company quickly gained attention for its innovative approach, focusing on creating powerful yet cost-effective models.

One of DeepSeek’s biggest achievements is DeepSeek-V3. The model was trained in about two months, at an estimated cost of roughly $5.6 million in GPU time. U.S. export controls limited the company to Nvidia’s H800 GPUs, a reduced-bandwidth variant of the H100, rather than Nvidia’s most advanced hardware, so DeepSeek had to use its resources efficiently.

Through this approach, DeepSeek has produced models that rival industry leaders such as OpenAI’s ChatGPT. The company’s focus on efficiency, combined with its commitment to open-source development, has made DeepSeek-V3 a highly capable and accessible AI model for developers and researchers worldwide.
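As a rough sanity check on the training figures quoted in this section, the sketch below reproduces the arithmetic behind the cost estimate. The GPU-hour total, the $2 per GPU-hour rental rate, and the 2,048-GPU cluster size are not from this article; they are the figures given in DeepSeek's technical report, and the rental rate is itself an assumption made there. The result covers only the final training run, not earlier research or experiments.

```python
# Back-of-the-envelope check of the reported training cost and duration.
# All inputs are figures quoted from the DeepSeek-V3 technical report,
# not independent measurements.
GPU_HOURS = 2_788_000        # total H800 GPU-hours reported for training
PRICE_PER_GPU_HOUR = 2.00    # assumed rental price in USD per GPU-hour
CLUSTER_GPUS = 2_048         # H800 GPUs in the training cluster


def training_cost_usd(gpu_hours: float, price_per_hour: float) -> float:
    """Cost of renting the GPUs for the given number of GPU-hours."""
    return gpu_hours * price_per_hour


def wall_clock_days(gpu_hours: float, gpus: int) -> float:
    """Elapsed days if all GPUs run in parallel for the whole job."""
    return gpu_hours / gpus / 24


cost = training_cost_usd(GPU_HOURS, PRICE_PER_GPU_HOUR)
days = wall_clock_days(GPU_HOURS, CLUSTER_GPUS)
print(f"Estimated cost: ${cost:,.0f}")
print(f"Estimated duration: {days:.1f} days")
```

Under these assumptions the cost and the two-month timeline are consistent with each other: the same GPU-hour total yields both numbers.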

Technical Specifications of DeepSeek V3

DeepSeek-V3 represents a major leap in natural language processing. It uses a Mixture-of-Experts (MoE) architecture, with 671 billion total parameters and 37 billion activated per token. This innovative design strikes a balance between power and efficiency, making it effective for a wide range of AI tasks.
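To make the "activated per token" idea concrete, here is a minimal sketch of generic top-k expert routing. It is not DeepSeek's implementation: the expert count, the scalar "experts", and the softmax gate are all illustrative assumptions, and the production router differs in details this article does not cover. It shows only the core mechanism, in which a router scores every expert but just a few of them run for each token.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_scores, top_k):
    """Pick the top_k highest-scoring experts and renormalize their weights."""
    probs = softmax(router_scores)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    chosen = ranked[:top_k]
    norm = sum(probs[i] for i in chosen)
    return {i: probs[i] / norm for i in chosen}


def moe_layer(token, experts, router_scores, top_k):
    """Weighted sum of the outputs of only the selected experts."""
    gates = route_token(router_scores, top_k)
    return sum(weight * experts[i](token) for i, weight in gates.items())


# Toy setup (hypothetical): 8 experts, each a simple scalar function.
NUM_EXPERTS = 8
TOP_K = 2
experts = [lambda x, scale=i + 1: scale * x for i in range(NUM_EXPERTS)]

random.seed(0)
scores = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
gates = route_token(scores, TOP_K)
output = moe_layer(1.0, experts, scores, TOP_K)
print(f"Experts used for this token: {sorted(gates)} of {NUM_EXPERTS}")

# Share of parameters active per token, from the figures quoted above.
active_fraction = 37e9 / 671e9
print(f"Active parameters per token: {active_fraction:.1%}")
```

The last two lines apply the same idea to the article's numbers: with 37 billion of 671 billion parameters active, each token touches only about 5.5% of the model.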

The model was trained on 14.8 trillion high-quality tokens. Training at this scale exposes DeepSeek-V3 to a broad range of domains, which helps it generate accurate and contextually relevant responses.

Additionally, DeepSeek-V3 uses FP8 mixed precision to enhance training efficiency. Running much of the computation in 8-bit floating point speeds up calculations and reduces memory use, which cuts the overall cost of training. As a result, DeepSeek-V3 is not only powerful but also comparatively inexpensive to train.
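The memory side of that saving is simple arithmetic, sketched below. The sketch counts only the raw bytes needed to hold one copy of the model's values at each precision. Mixed-precision training keeps some tensors in higher precision, so treat the numbers as an upper bound on the saving, not as DeepSeek's actual memory footprint.

```python
# Raw storage for one copy of the model's values at different precisions.
BYTES_PER_VALUE = {"FP32": 4, "BF16": 2, "FP8": 1}

TOTAL_PARAMS = 671e9    # total parameters, as quoted above
ACTIVE_PARAMS = 37e9    # parameters activated per token, as quoted above


def storage_gb(num_values: float, fmt: str) -> float:
    """Gigabytes (10^9 bytes) needed to store num_values in the given format."""
    return num_values * BYTES_PER_VALUE[fmt] / 1e9


for fmt in ("FP32", "BF16", "FP8"):
    total = storage_gb(TOTAL_PARAMS, fmt)
    active = storage_gb(ACTIVE_PARAMS, fmt)
    print(f"{fmt}: {total:,.0f} GB for all weights, {active:,.0f} GB active per token")
```

Halving the bytes per value also halves the data that has to move through memory and between GPUs, which is where much of the speedup from lower precision typically comes from.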

Benchmark Performance: A Challenger to ChatGPT

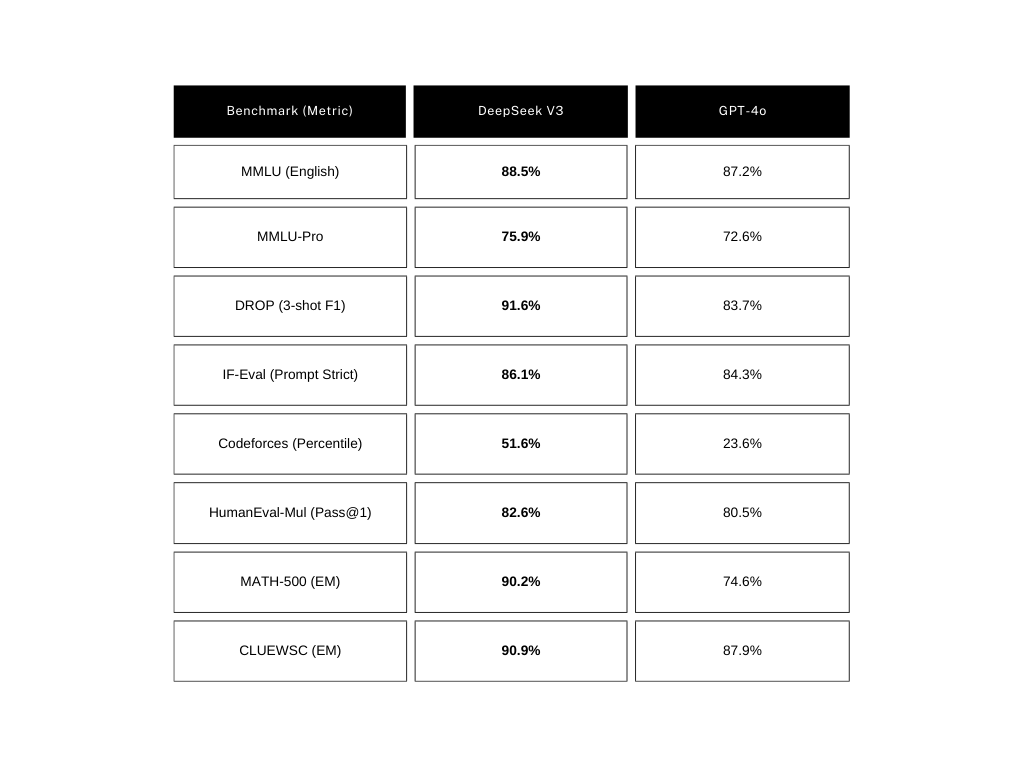

DeepSeek-V3 has proven itself as a strong competitor in the AI space. It achieved 88.5% on the MMLU (English) benchmark, surpassing GPT-4o’s score of 87.2%. In more specialized tasks, such as MMLU-Pro, DeepSeek-V3 scored 75.9%, showing significant improvements over its predecessor, DeepSeek-V2.

The model also did well on reasoning and instruction-following tasks, scoring 91.6% on DROP (3-shot F1) and 86.1% on IF-Eval (Prompt Strict). In coding tasks, DeepSeek-V3 reached the 51.6th percentile on the Codeforces benchmark and scored 82.6% on HumanEval-Mul (Pass@1), outperforming GPT-4o and several other models.

Moreover, DeepSeek-V3 demonstrated impressive math skills, scoring 90.2% on MATH-500 (EM). It also excelled in Chinese-language tasks, with a 90.9% score on CLUEWSC (EM). These results position DeepSeek-V3 as a strong alternative to ChatGPT and other top-tier models in the AI field.
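For readers unfamiliar with the Pass@1 metric used for the coding score above, the sketch below shows the standard unbiased pass@k estimator introduced with the original HumanEval benchmark. This article does not describe how DeepSeek sampled or scored its runs, so the per-problem counts here are made up purely for illustration.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: samples generated, c: samples that pass all tests, k: attempt budget.
    """
    if n - c < k:
        # Fewer than k failures exist, so any k samples include a pass.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results, k):
    """Average pass@k over problems; results is a list of (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical results: 4 problems, 10 samples each, varying pass counts.
example_results = [(10, 10), (10, 5), (10, 0), (10, 8)]
score = benchmark_pass_at_k(example_results, k=1)
print(f"pass@1 = {score:.1%}")
```

With k=1 the estimator reduces to the fraction of samples that pass, averaged over problems, so a Pass@1 of 82.6% means a single generated solution passes the tests about 83% of the time on average.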

Conclusion

DeepSeek-V3 is rapidly emerging as a powerful challenger to ChatGPT, with its advanced capabilities, impressive benchmark performance, and open-source accessibility. Its innovative Mixture-of-Experts (MoE) architecture, combined with efficient training techniques, positions it as a cost-effective yet high-performance alternative to existing AI models. Developers and researchers now have a compelling open-source option that rivals some of the best proprietary models on the market, making DeepSeek-V3 a strong contender in the future of AI development.